Techniques to Manage Huge Documents with Limited Token Size

Working with Large Language Models (LLMs) often presents a unique challenge: limited token size. When dealing with massive documents that exceed these limits, we need innovative approaches to extract insights without losing context. This edition explores three powerful techniques—Map-Reduce, Refine and Map-Rerank—to efficiently process large-scale text while staying within token constraints.

1️⃣ Map-Reduce: Simplifying Complexity

This method breaks down large datasets into smaller chunks, processes each chunk individually with an LLM and then combines the results to derive the final answer. The “Map” stage ensures scalability, while the “Reduce” stage synthesizes the results into a cohesive insight.

Best Use Case: When working with large documents where summarizing or extracting key themes is required.

2️⃣ Refine: Building Layered Answers

In the refine approach, the process starts with the LLM generating an initial response for the first chunk of data. This answer is then iteratively improved by feeding in subsequent chunks. Each step enhances the quality of the output, ensuring that the context evolves as more information is considered.

Best Use Case: Tasks requiring a nuanced understanding, such as crafting detailed reports or step-by-step problem-solving.

3️⃣ Map-Rerank: Optimising Relevance

The Map-Rerank approach evaluates multiple chunks in parallel, assigns a score to each result (based on relevance or confidence), and selects the highest-scoring output as the final answer.

Best Use Case: Information retrieval tasks, such as finding the most relevant answer to a specific query in large databases.

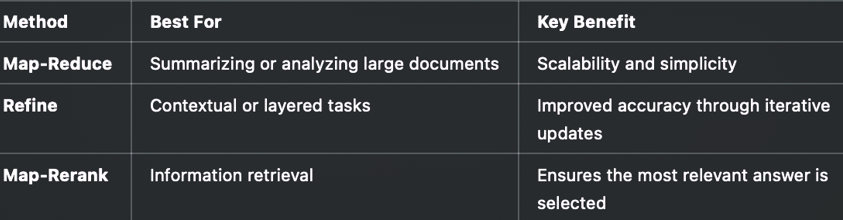

Why These Methods Matter

Each technique is tailored for specific scenarios, making it easier to handle large datasets without overwhelming the LLM or compromising accuracy. Here’s a quick summary of when to use each:

Let’s make AI work smarter, not harder!